In the 1960s, aviation organizations called for a uniform system to understand and communicate weather conditions. This led to the development of METAR, a basic code format that can be transmitted quickly and understood universally.

This codified form is still used today. For example, here is a report for Paris (Charles de Gaulle airport, station code LFPG):

```
METAR LFPG 120600Z 17003KT 8000 RA FEW006TCU SCT040 BKN100 13/12 Q1011 TEMPO BKN006
```

Nearly every novice pilot asks: "Why can't this just be in plain language?"

True enough, most flight planning applications will translate the code into readable text.

However, seasoned pilots (along with air traffic controllers and other aviation professionals) consistently prefer the coded format. It's concise (a single line versus an entire paragraph), structured in a standardized way (you know precisely where to find any specific piece of information), and its strict definitions leave no room for misinterpretation.

Asking an LLM second-order questions and hoping it will translate them into a working SQL query is naive. The same goes for any programming language. What today's analytics practice lacks is a language that fully expresses our semantics. SQL is the lingua franca of databases; natural language is good for instructions and probing. What sits in between: the space where we can manipulate, extend, and transform our analytics concepts?

Basically, where is the mathematics of our analytics?

Mathematics without formalism

Kids often ask why they have to learn mathematics. What's the point of learning times tables if a calculator can do it faster and better?

As we grow, we understand two things about mathematics:

- It provides mental models and patterns that help us solve almost anything.
- Its semantics and syntax are top-notch: it is one of the best semantic engines humans have created.

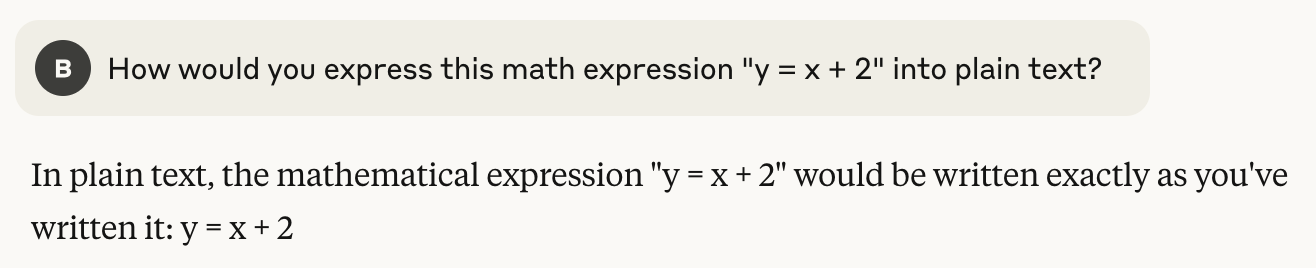

I mean, which formulation do you prefer?¹

"For any given value of x, the corresponding value of y is found by adding 2 to x."

"The value of y is always 2 more than the value of x."

"y = x + 2"

The mathematical expression isn't just shorter; it's transformative. It allows manipulations, extensions, and applications that are impossible with prose descriptions.
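As a small worked illustration (the second relation, z = 3y, is a hypothetical addition), the symbolic form can be inverted and composed mechanically, something none of the prose versions allow:

```latex
\begin{aligned}
y = x + 2 \;&\iff\; x = y - 2
  && \text{(inversion: subtract 2 from both sides)} \\
z = 3y,\; y = x + 2 \;&\implies\; z = 3(x + 2) = 3x + 6
  && \text{(composition: substitute } y \text{ and expand)}
\end{aligned}
```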

The theory behind today's AI revolution is fundamentally mathematical. Breakthroughs from Newton's calculus to Einstein's relativity to modern machine learning happened not just because of ideas, but because mathematical symbolism gave those ideas wings. Without a formal mathematical language, these concepts would have remained trapped in verbose, imprecise descriptions.

Also, when we discover the right syntax to express complex semantics, we don't just describe the world differently; we see it differently.

Analytics with formalism

I recently experimented with MCP (Model Context Protocol) and Malloy². The magic of open source made it possible to combine the work of the great Kyle Nesbit, Malloy Publisher (software that serves Malloy models over an API), with Nick Amabile's MCP implementation for Malloy.

> [Malloy] Publisher takes the semantic models defined in Malloy – models rich with business context and meaning – and exposes them through a server interface. This allows applications, AI agents, tools, and users to query your data consistently and reliably, leveraging the shared, unambiguous understanding defined in the Malloy model.

Just as "y = x + 2" allows transformations that are impossible with prose descriptions, a semantic modeling language like Malloy lets us express "revenue = sum(order_amount) - sum(discount)" as a reusable concept that carries a consistent meaning across all queries. This isn't just about brevity; it's about creating manipulable building blocks that can be composed into increasingly sophisticated analytical expressions.
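A minimal sketch of that idea in plain Python, assuming hypothetical order rows with month, segment, order_amount, and discount fields: revenue is defined once, reused unchanged under different groupings, and composed into a new measure. The query helper and the sample data are illustrative only; this is not how Malloy is implemented.

```python
from collections import defaultdict
from typing import Callable, Iterable

Row = dict
Measure = Callable[[list[Row]], float]


def revenue(rows: list[Row]) -> float:
    """Defined once: revenue = sum(order_amount) - sum(discount)."""
    return sum(r["order_amount"] for r in rows) - sum(r["discount"] for r in rows)


def revenue_per_order(rows: list[Row]) -> float:
    """Composed measure: built on top of the existing revenue definition."""
    return revenue(rows) / len(rows)


def query(rows: Iterable[Row], group_by: str, measures: dict[str, Measure]) -> dict:
    """Hypothetical helper: group rows by one dimension, evaluate each named measure per group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_by]].append(row)
    return {key: {name: m(grp) for name, m in measures.items()} for key, grp in groups.items()}


# Hypothetical sample data, for illustration only.
orders = [
    {"month": "March", "segment": "retail", "order_amount": 120.0, "discount": 20.0},
    {"month": "March", "segment": "b2b", "order_amount": 500.0, "discount": 50.0},
    {"month": "April", "segment": "retail", "order_amount": 80.0, "discount": 0.0},
]

# The same definitions answer differently shaped questions.
by_month = query(orders, "month", {"revenue": revenue, "revenue_per_order": revenue_per_order})
by_segment = query(orders, "segment", {"revenue": revenue})

if __name__ == "__main__":
    print(by_month)
    print(by_segment)
```

The point of the design is that no query restates the revenue formula: changing the definition in one place changes every answer consistently.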

As the image below shows, an MCP server is one of the possible interfaces for interacting with what is now a proper semantic layer.

Here are some thoughts after playing with these tools:

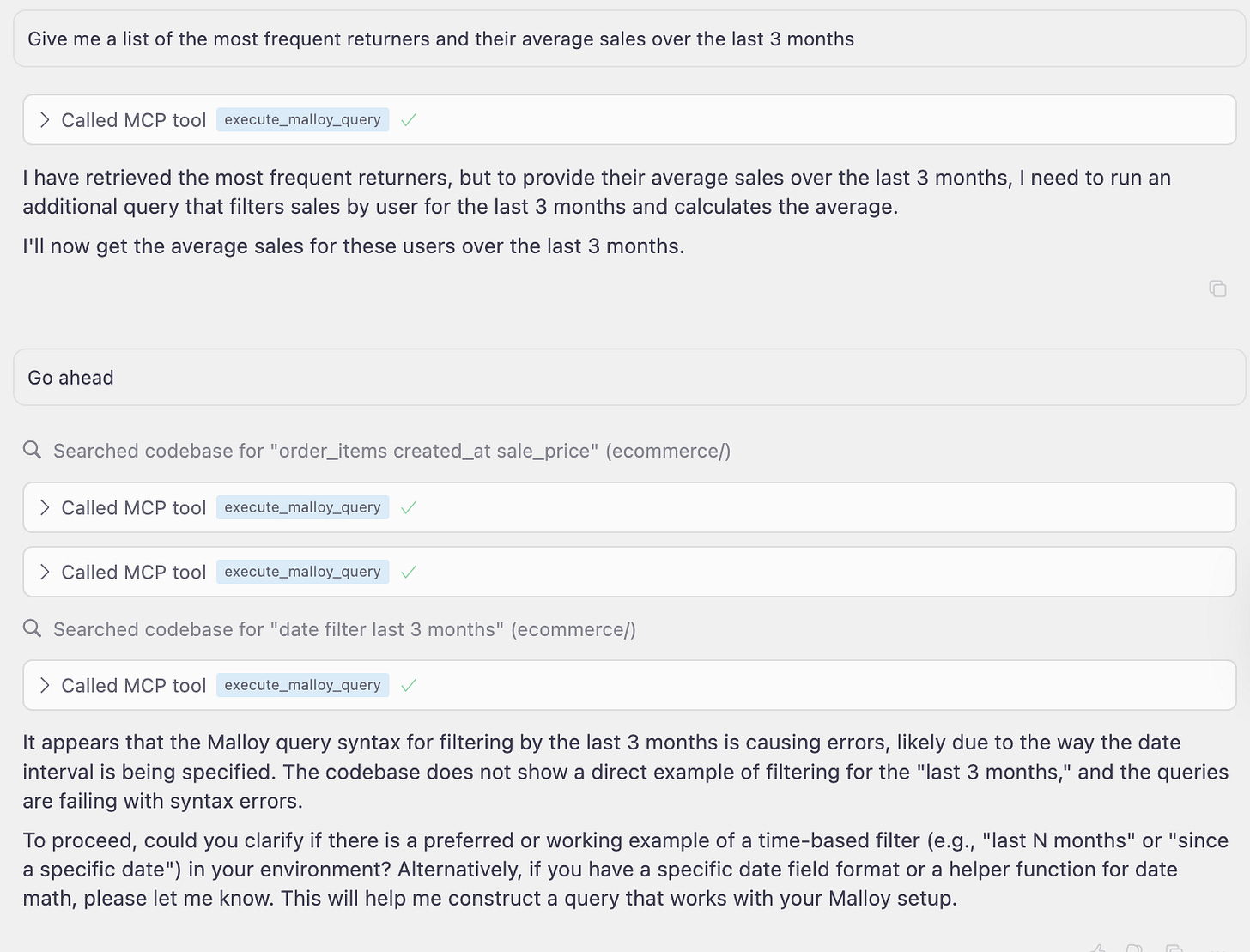

First-order questions, which deal with direct data retrieval and simple aggregations ('What were total sales in March?'), work fine. Second-order questions, which require understanding relationships between concepts, interpreting business logic, or multi-step analysis ('How did customer retention correlate with our pricing changes across different segments?'), typically fail. They require not just data access but a semantic understanding of what the data represents in a business context.

For example, because the semantics for users and orders are properly defined, such a prompt works well.

But complex prompts challenge the model.

This might be due to poor prompting on my part, but it still seems like a fairly basic question that a human could easily answer by writing a Malloy or SQL query directly.

These mixed results are not surprising: the MCP server wasn't extensively fed with Malloy query examples or explanations.

It's likely that, if we properly teach LLMs Malloy's syntax and semantics, they could function as an ideal "text-to-SQL" framework, with a proper semantic layer built in Malloy sitting in between.

After all, the effectiveness of an LLM often hinges on the quality and relevance of its training data. We could argue that this applies to any semantic source, including SQL. So what makes Malloy special?

An LLM can handle first-order questions by generating SQL: the classic "text-to-SQL" approach. But when we move to second-order complexity, asking good questions and getting reliable answers depends absolutely on having good underlying semantics.

For instance, how do we handle situations where the LLM misinterprets business logic or wrongly assumes relationships within the data? We would once again have to "teach" the system our specific data semantics, and SQL isn't designed for that. We need a proper formalism for our business theory.

Context is the new frontend; backend?

In traditional systems, we separate frontend (what users see and interact with) from backend (where data lives and logic executes).

In analytics, the semantic understanding of data is a coevolutionary relationship between the frontend we interact with through natural language and the backend that ensures query accuracy and consistency.

The frontend is not really new: we've been querying dashboards in natural language for years. But now, we are about to fit in the backend as well.

What were the weather conditions in Paris, in terms of temperature, wind, and visibility, as daily averages over the last month?

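```malloy
source: weather is duckdb.table('weather.parquet') extend {
  // Measures are defined once on the source and reused by any query or view
  measure:
    avg_temperature is temperature.avg()
    avg_wind_speed is wind_speed.avg()
    avg_visibility is visibility.avg()

  // Daily averages for Paris
  view: weather_paris is {
    group_by: date_.day
    aggregate:
      avg_temperature
      avg_wind_speed
      avg_visibility
    where: location = 'Paris'
    // No date filter: restricting to the last month would need an extra condition on date_
  }
}
```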
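For readers more at home in a general-purpose language, here is a rough plain-Python analogue of what the weather_paris view computes. It assumes each row of weather.parquet has already been loaded as a dict with date_ (a datetime), location, temperature, wind_speed, and visibility keys, and that there are no missing values (Malloy's avg() skips nulls; this sketch does not handle them). The sample rows are hypothetical. Like the view, it filters on location, groups by day, and averages each measure, and it likewise applies no last-month filter.

```python
from collections import defaultdict
from datetime import date, datetime
from statistics import mean


def weather_paris(rows: list[dict]) -> dict[date, dict[str, float]]:
    """Plain-Python analogue of the Malloy view: filter, group by day, average."""
    by_day = defaultdict(list)
    for row in rows:
        if row["location"] == "Paris":  # where: location = 'Paris'
            by_day[row["date_"].date()].append(row)  # group_by: date_.day
    return {
        day: {  # aggregate: one average per measure
            "avg_temperature": mean(r["temperature"] for r in day_rows),
            "avg_wind_speed": mean(r["wind_speed"] for r in day_rows),
            "avg_visibility": mean(r["visibility"] for r in day_rows),
        }
        for day, day_rows in by_day.items()
    }


# Hypothetical sample rows, for illustration only.
rows = [
    {"date_": datetime(2024, 5, 1, 6), "location": "Paris", "temperature": 12.0, "wind_speed": 4.0, "visibility": 8000},
    {"date_": datetime(2024, 5, 1, 18), "location": "Paris", "temperature": 16.0, "wind_speed": 6.0, "visibility": 10000},
    {"date_": datetime(2024, 5, 1, 12), "location": "Lyon", "temperature": 20.0, "wind_speed": 2.0, "visibility": 9999},
]

if __name__ == "__main__":
    print(weather_paris(rows))
```

The contrast is the point: the Python version restates the filter, the grouping, and every average inline, while the Malloy model names the measures once and the view simply references them.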

If you need a refresher on Malloy: