"Improving your business is about more acquisition and less churn."

How can I improve the business?

"Among the February cohort of new users, 67% of them churned after 3 days"

What's the churn rate after 3 days in February?

"Users are churning because there's an issue when they try to invite a new user to their organization."

Why are users churning after 3 days of usage?

"Here is the code I deployed to production to fix the churn issue: </....>"

What code did you deploy to fix the churn issue in production?

In many applications, admins can audit logs: they can see what happened, review it, and restore changes.

That's one of the main reasons the declarative-dom emerged, and why it sticks.

We're not clicking here and there; we declare our actions. Ultimately, we store them and version-control them.
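The idea of declaring actions, storing them, and restoring older states can be sketched as an append-only action log (a pattern often called event sourcing). This is a minimal Python illustration under my own assumptions: the `Action` and `ActionLog` names, the `UNSET` marker, and the key/value state are hypothetical and not taken from any particular product. Restoring never rewrites history; it declares new actions that bring the state back, so the audit trail stays intact.

```python
from dataclasses import dataclass, field
from typing import Any

UNSET = object()  # hypothetical marker meaning "this key was removed"


@dataclass(frozen=True)
class Action:
    """A declared action: which key to change, its new value, and who declared it."""
    key: str
    value: Any
    author: str


@dataclass
class ActionLog:
    """Append-only log: history is never edited, state is derived by replay."""
    entries: list[Action] = field(default_factory=list)

    def declare(self, action: Action) -> int:
        """Append an action and return its version number (1-based)."""
        self.entries.append(action)
        return len(self.entries)

    def state_at(self, version: int) -> dict[str, Any]:
        """Rebuild the state by replaying the first `version` actions in order."""
        state: dict[str, Any] = {}
        for action in self.entries[:version]:
            if action.value is UNSET:
                state.pop(action.key, None)
            else:
                state[action.key] = action.value
        return state

    def audit(self) -> list[str]:
        """Human-readable history of who declared what, in order."""
        lines = []
        for version, a in enumerate(self.entries, start=1):
            change = f"unset {a.key}" if a.value is UNSET else f"set {a.key}={a.value!r}"
            lines.append(f"v{version}: {a.author} {change}")
        return lines

    def restore(self, version: int, author: str) -> int:
        """Go back to an older version by declaring new actions, keeping the trail intact."""
        target = self.state_at(version)
        current = self.state_at(len(self.entries))
        # Keys that did not exist at the target version get unset.
        for key in sorted(current.keys() - target.keys()):
            self.declare(Action(key, UNSET, author))
        # Keys whose value changed since the target version get their old value back.
        for key, value in target.items():
            if key not in current or current[key] != value:
                self.declare(Action(key, value, author))
        return len(self.entries)


if __name__ == "__main__":
    log = ActionLog()
    log.declare(Action("plan", "free", "alice"))  # v1
    log.declare(Action("plan", "pro", "bob"))     # v2
    log.declare(Action("seats", 10, "bob"))       # v3
    log.restore(1, "admin")                       # declares v4 and v5
    print(log.state_at(len(log.entries)))         # {'plan': 'free'}
    print("\n".join(log.audit()))
```

Because every state is derived by replaying the log, "what happened" and "what the state was at version N" come from the same stored declarations, which is what makes them easy to version-control and audit.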

The new trendy interface, MCP (Model Context Protocol), echoes that concept.

Now actions come straight out of our writing or talking.

It's not only about the past anymore.

Manuscripts, printing, computing: it has all been about recording the present so that we can retrieve it in the future.

But MCP breaks that pattern now.

It's now about action. Present action.

I'm writing what I want the computer to do. Right now.

We could fool ourselves and call it a day.

But we still need language. Old ones, common ones, and new ones.

Recently, I've been playing with MCP: first with Kestra, where I was able to control my instance using only natural language; then with Malloy, where I got closer to the true "text-to-SQL" motion I've been talking about lately.

And once again, the choice of interface is put to the test.

For example, it wouldn’t be optimal to use natural language for keeping track of orders made by a customer.

Rows and columns are handy here.

However, asking my computer to store my latest customer order sounds way easier than writing an INSERT INTO statement or clicking through some interface.

A graphical interface is built because we need to persist actions.

But now that actions can be declared, these "graphical" interfaces provide less value for taking action.

Ultimately, our native language feels natural because we can easily say nonsense without realizing it. Natural language is very useful because it fades.

This is the real value of MCP: writing logs of actions and decisions without realizing it.

I don't know if a reverse MCP server is already a thing, but I can see a world where we would want to play Jeopardy for real.

To audit the action taken.

To imagine a world and play backward thinking.

To probe ourselves.

📡 Expected Contents

Mold and Rust Logic

I've recently been reflecting on how data is dust: on why software only moves forward, and why ephemeral software should decay and lose data.

In the physical world, our artifacts fade: we put them in the trash. In the digital world, they kind of stick around forever.

Here is a nice post reflecting on rust logic and mold logic:

Rust logic’s central tension is preventative maintenance vs. planned obsolescence.

Mold logic’s central tension is calculated sanitation vs. managed overgrowth.

Malloy again

Yes, I'm here again with Malloy. I'm not a prophet and don't know if it will stick in the long term. But this project keeps intriguing me. It holds a deep promise: a semantic layer that, to me, looks like mathematics.

If you need a refresher, here is a nice discussion where Lloyd Tabb presents Malloy and goes deeper into it (the index part is thrilling!).

You probably haven't missed it: everyone is into MCP now, and Malloy is no exception. With the great Malloy Publisher, which takes the semantic models defined in Malloy and exposes them through a server interface, a dedicated MCP server was a natural next step.

I put down some thoughts on the possible implications of Malloy for analytics in the blog post section later in this issue.

On the foolishness of "natural language programming".

We're all rooting for LLMs, and that's cool.

I wonder, what if you did the opposite? Take a project of moderate complexity and convert it from code back to natural language using your favorite LLM. Does it provide you with a reasonable description of the behavior and requirements encoded in the source code without losing enough detail to recreate the program? Do you find the resulting natural language description is easier to reason about?

I think there's a reason most of the vibe-coded applications we see people demonstrate are rather simple. There is a level of complexity and precision that is hard to manage. Sure, you can define it in plain English, but is the resulting description extensible, understandable, or more descriptive than a precise language? I think there is a reason why legalese is not plain English, and it goes beyond mere gatekeeping.

From one gut feeling I derive much consolation: I suspect that machines to be programmed in our native tongues —be it Dutch, English, American, French, German, or Swahili— are as damned difficult to make as they would be to use.

Same for maths and coding - once you reach a certain level of expertise, the complexity and redundancy of natural language is a greater cost than benefit. This seems to apply to all fields of expertise.

Here is a great post discussing natural language programming, from 2010... I think we can move on.

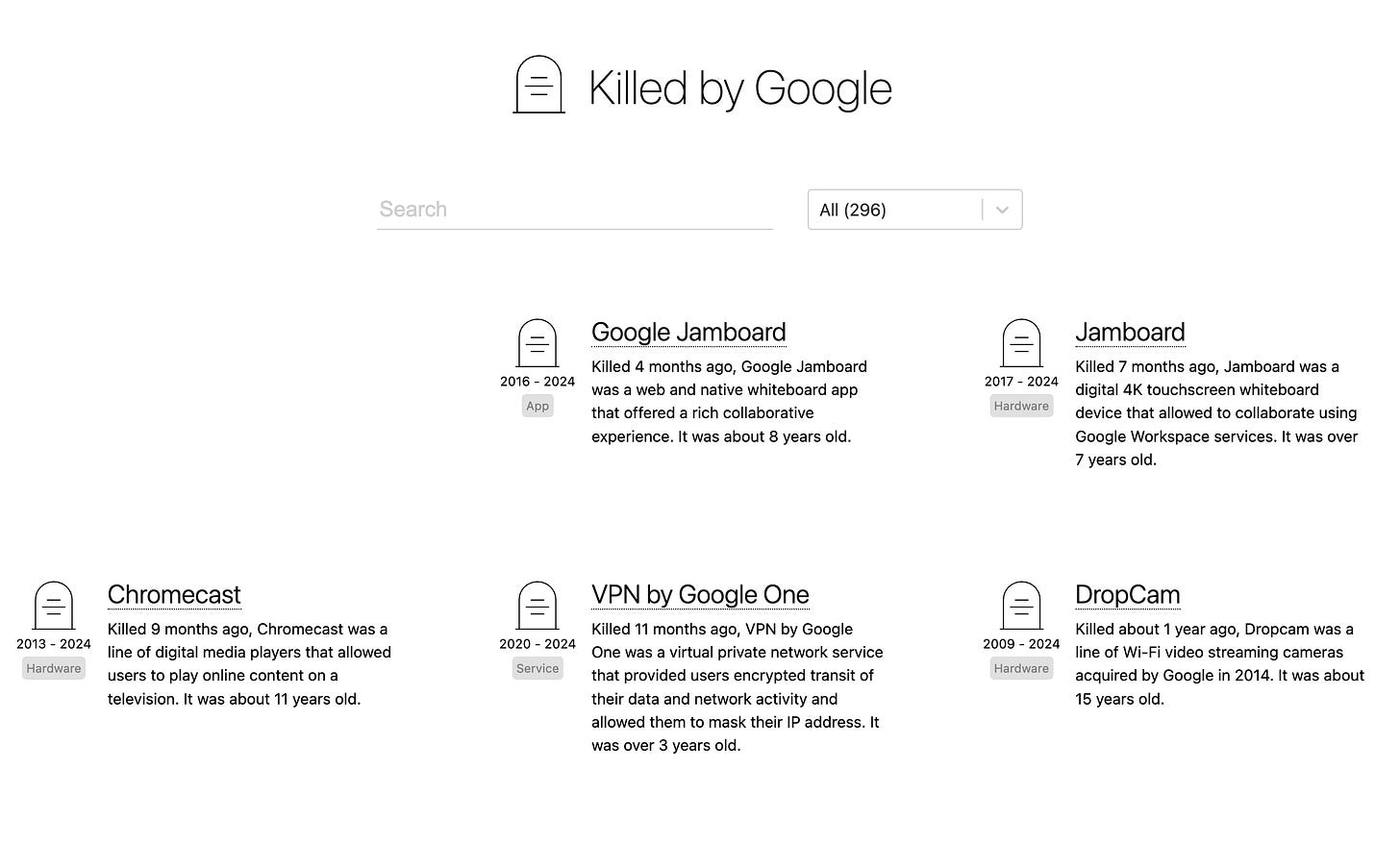

Killed by Google

A reminder that even Google throws away its projects. It's also a reminder that creating projects is like VC: only one in many will pay back your investment. So go wide, be ready to throw stuff away, and expect only a few projects to return your (time) investment.

📰 The Blog Post

What Syntax for the Semantic Layer? I'm sticking to my conclusion for now, but recent developments in Malloy make me think we're on the path to something great.

Here are some reflections on recent tests and vibe-coding sessions using Malloy.

The Implication of Malloy in Analytics

In the 1960s, aviation organizations called for a uniform system to understand and communicate weather conditions. This led to the development of METAR, a basic code format that can be transmitted quickly and understood universally.
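To make that concrete, here is what such a compact code looks like and how little it takes to decode a few of its groups. This is a minimal Python sketch under stated assumptions: the sample report is illustrative (not a real observation), and only a handful of groups are handled: wind in knots, temperature/dew point (with `M` for negative values), and QNH pressure in hectopascals. Real METARs carry many more groups (visibility, clouds, weather, remarks, `A` altimeter settings in inches of mercury) that this deliberately skips.

```python
import re

# Simplified patterns for a few common METAR groups (not the full specification).
WIND = re.compile(r"^(\d{3}|VRB)(\d{2,3})(?:G(\d{2,3}))?KT$")  # e.g. 24010G20KT
TEMP = re.compile(r"^(M?\d{2})/(M?\d{2})?$")                     # e.g. M02/M05
QNH = re.compile(r"^Q(\d{4})$")                                  # e.g. Q1015


def _celsius(token: str) -> int:
    """METAR writes negative temperatures with a leading 'M' (M05 means -5 °C)."""
    return -int(token[1:]) if token.startswith("M") else int(token)


def decode_metar(report: str) -> dict:
    """Decode station, time, wind, temperature/dew point and QNH from a METAR string."""
    tokens = report.split()
    if tokens and tokens[0] in ("METAR", "SPECI"):
        tokens = tokens[1:]
    # First two groups: ICAO station code, then day/hour/minute in UTC (DDHHMMZ), kept raw.
    decoded = {"station": tokens[0], "time": tokens[1]}
    for token in tokens[2:]:
        if m := WIND.match(token):
            direction, speed, gust = m.groups()
            decoded["wind"] = {
                "direction_deg": None if direction == "VRB" else int(direction),
                "speed_kt": int(speed),
                "gust_kt": int(gust) if gust else None,
            }
        elif m := TEMP.match(token):
            temp, dew = m.groups()
            decoded["temperature_c"] = _celsius(temp)
            decoded["dew_point_c"] = _celsius(dew) if dew else None
        elif m := QNH.match(token):
            decoded["qnh_hpa"] = int(m.group(1))
        # Any other group (visibility, clouds, remarks...) is ignored in this sketch.
    return decoded


if __name__ == "__main__":
    # Illustrative report, not a real observation.
    print(decode_metar("METAR LFPG 121200Z 24010G20KT 9999 FEW030 M02/M05 Q1015"))
```

A dozen characters per group carry a full observation, and the positional, fixed-width layout is exactly what makes it quick to transmit and unambiguous to read.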

🎨 Beyond The Bracket

In April, I drove from Paris through the Channel Tunnel, coming out at Folkestone, near Dover. From there, I drove northward, winding my way through the English countryside toward Matlock, nestled in the rolling hills of Derbyshire in the heart of the Midlands.

It was the first time I had to drive on the left. I anticipated the change would be more difficult than it actually proved to be in practice.

Maybe my neuroplasticity is still in good shape 😅

The Roman Baths in Bath reminded me that the Romans actually occupied Britain from AD 43 to AD 410.

Archaeological evidence suggests the Romans drove their carts and wagons on the left side of the road, a practice they likely brought to Britain during their occupation.¹

This wasn't arbitrary: in medieval times, most people were right-handed, and traveling on the left side of the road allowed knights and travelers to keep their dominant sword-hand free to defend against potential attackers approaching from the opposite direction.

In France and North America, the shift toward right-side driving was influenced by the use of large freight wagons pulled by multiple horses. Unlike British carriages, these wagons had no driver's seat; instead, the driver sat on the left rear horse to keep their whip hand (the right hand) free. From this position, drivers had better visibility when passing other wagons on the right, gradually establishing this as standard practice.

Before this, the custom was for the wealthier classes, who travelled by carriage and on horseback, to keep to the left side of the road. Poor people did not have these luxuries, so they walked, and these pedestrians were relegated to the right side.

During the French Revolution, the government under Maximilien Robespierre mandated driving on the right as an egalitarian measure, erasing the class distinction that had previously reserved the left side for the aristocracy and wealthy classes. Napoleon subsequently enforced this practice throughout territories under French control, spreading right-hand traffic across continental Europe.

Back in France after eleven days, I didn't have any issue switching back to driving on the right.

It seems we often anticipate the change to be more difficult than it actually proves to be in practice.

That also suggests that, with proper preparation and awareness, adaptation can occur relatively quickly...

Oh, there's sun out there! Truly, you can't beat that stuff: sun, good food, friends, love, family, reading, walks, sleep...

Time off abroad, visits, walks, and talks pulled me away from the current nihilism in tech.

But I'm still wondering how our work and lives are evolving. Being focused on product management (engineering cycles, UX issues, customer feedback, and marketing, with one foot still in data), I can see tons of things I would like to automate. Or at least, I'd like to.

But each time I'm on the verge of automating something worth my time, it hits a dead end... The output sounds like rubbish: non-human, robotic. I also fear it's not good work.

This is partly because models aren't that smart yet, but also because some of my work is inherently human: probing users, coordinating human work, prioritization, design, etc. The deeper I go into a subject, the more a really good probing session becomes the only truly valuable asset. The answers then unfold logically; they're cheap. But only because of the upstream taste, time, and questions.²

And don't get me wrong: LLMs are great partners for probing and finding these.